
Best virtualization software for overselling RAM (KVM)?

I'm currently using Proxmox and have run into an issue with overprovisioning RAM. If I create a virtual machine and allocate it 40GB of RAM, the node will not reserve that RAM as long as the VM never peaks at 40GB.

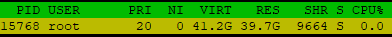

If I use the whole 40GB of RAM, then stop the process and run sync; echo 3 > /proc/sys/vm/drop_caches, the virtual machine afterwards shows:

free -m

| | total | used | free | shared | buff/cache | available |
|---|---|---|---|---|---|---|
| Mem: | 40074 | 1200 | 38842 | 4 | 31 | 38596 |
| Swap: | 4095 | 44 | 4051 | | | |

However, the Proxmox node still reserves 40GB of RAM for it, and it stays that way until I completely power the VM off and on again.
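A quick way to check what the node is actually holding for a guest is the resident set size (VmRSS) of that VM's QEMU process, read from /proc/<pid>/status on the host. This is a minimal sketch, assuming you already know the PID (on Proxmox it is usually stored in /var/run/qemu-server/<vmid>.pid); the function names are hypothetical and the sample numbers are invented, not taken from this thread.

```python
import re
from pathlib import Path


def vm_rss_mib(status_text: str) -> float:
    """Return VmRSS from the text of /proc/<pid>/status, in MiB."""
    match = re.search(r"^VmRSS:\s+(\d+)\s+kB$", status_text, re.MULTILINE)
    if match is None:
        raise ValueError("VmRSS line not found")
    return int(match.group(1)) / 1024


def qemu_rss_mib(pid: int) -> float:
    """Read the live value for a running QEMU process (Linux host only)."""
    return vm_rss_mib(Path(f"/proc/{pid}/status").read_text())


if __name__ == "__main__":
    # Illustrative excerpt; a real status file has many more fields.
    sample = "Name:\tqemu-system-x86\nVmRSS:\t41943040 kB\n"
    print(f"{vm_rss_mib(sample):.0f} MiB resident")
```

Comparing this value before and after the guest drops its caches shows whether the host actually got any memory back; a value that stays near the full allocation matches what is described above.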

KSM and ballooning are enabled. I have tried setting a lower minimum RAM for ballooning, but that just makes the virtual machine show less RAM than it should (free -m shows the minimum value).

Are there any hypervisors that work differently than this for KVM machines?

Comments

Which provider do you represent?

Lol, thanks for making me realise it's their first post 🤦‍♂️

Well, I knew this was coming before posting. I saw other threads like this with the same responses.

You guys are fine sharing CPU power, but for some reason you expect RAM to be dedicated? I am not looking to oversell RAM to the extent OpenVZ does.

Why is there so much hate towards this? I don't see the point of having lots of RAM on a node with only 20% of it in real use at any given time and the rest reserved for short peaks. Overselling is not the issue; overloading is, and that's not something I would do.

If you pulled all of that ^^ from my question - cool.

I simply asked which host you represent, as you're obviously not posting from your official account but from a made-up one.

I don't have an official account on LET

add more swap; the node will eventually take care of it and swap out old pages.

you can also try setting vm.zone_reclaim_mode to 1; it might help a bit.
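For reference, vm.zone_reclaim_mode is a bitmask: the kernel's vm sysctl documentation lists 1 = zone reclaim on, 2 = zone reclaim writes dirty pages out, 4 = zone reclaim swaps pages. The decoder below is only an illustration (the function name is hypothetical); the current value can be read from /proc/sys/vm/zone_reclaim_mode.

```python
# Bit meanings as listed in the kernel's vm sysctl documentation.
ZONE_RECLAIM_BITS = {
    1: "zone reclaim on",
    2: "zone reclaim writes dirty pages out",
    4: "zone reclaim swaps pages",
}


def decode_zone_reclaim_mode(value: int) -> list[str]:
    """Return the behaviours enabled by a vm.zone_reclaim_mode value."""
    if not 0 <= value <= 7:
        raise ValueError("expected a value between 0 and 7")
    return [desc for bit, desc in ZONE_RECLAIM_BITS.items() if value & bit]


if __name__ == "__main__":
    print(decode_zone_reclaim_mode(1))  # the value suggested above
    print(decode_zone_reclaim_mode(0))  # the kernel default: no zone reclaim
```

The same documentation describes the knob in terms of reclaiming memory on the local node before allocations are satisfied from other NUMA nodes, which is worth keeping in mind when judging how much it can do on a given machine.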

I am an honest person.

Thank you for the first on-topic post. I will give it a go, but after looking into it, doesn't it require NUMA to be enabled?


Harmony-VPS?

https://letmegooglethat.com/?q=Harmony+VPS+hosting+(who+is+Harmony+really+representing)

I'd have to be pretty dumb

if I remember correctly, with NUMA enabled, zone_reclaim_mode can help avoid specific performance issues you might otherwise run into, depending on page sizes. however, even without NUMA it forces page cache pages to be flushed (reclaimed) more quickly and therefore helps free up memory.

I have that enabled on my proxmox nodes to help with balancing, and I also tweaked swap usage and the vfs cache a bit. in my experience it does help if you do some reasonable overprovisioning.

of course there is no one-size-fits-all solution, and swap in particular tends to be a religious topic. it's probably something you need to carefully play around with for a bit.

The server gets slow if you oversell CPU

The server shuts down if you oversell RAM

That's why we hate it.

A theoretical answer: it is generally not possible to oversell RAM in full virtualization such as KVM.

The hypervisor may request the guest kernel to return some RAM, but the guest kernel may not understand or fulfill such requests.

The hypervisor could forcibly swap some of the allocated RAM out to SSD.

It would not cause correctness issues from the guest's point of view: the guest would simply see a very slow memory read.

However, this could cause strange behavior in the guest due to unexpected timing, which leads to customers opening tickets or writing negative reviews.

The server involucrates 400 disks if you oversell storage.

The server becomes NAT if you oversell IPv4.

That's why we love it.

This also explains why OP did not see memory released by the guest. By default, in most ballooning configurations, memory is only requested from guests when memory usage on the host is high.
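A toy model of that behaviour, to make the idea concrete: the host only asks guests to shrink once its own usage passes a threshold, and it never pushes a guest below its configured minimum. The 80% threshold, the proportional split and the Guest/balloon_targets names are all assumptions for illustration; this is not how Proxmox's autoballooning is actually implemented.

```python
from dataclasses import dataclass


@dataclass
class Guest:
    name: str
    max_mib: int      # memory configured for the VM
    min_mib: int      # balloon minimum: never shrink the guest below this
    current_mib: int  # memory the guest currently holds


def balloon_targets(host_total_mib, host_used_mib, guests, threshold=0.80):
    """Toy model of host-driven ballooning; returns a target size per guest.

    threshold is an assumed value, not read from any real configuration.
    """
    excess = host_used_mib - host_total_mib * threshold
    if excess <= 0:
        # No pressure: deflate every balloon so guests may use their full size.
        return {g.name: g.max_mib for g in guests}

    # Under pressure: take the excess from each guest in proportion to how
    # much it holds above its minimum, never going below that minimum.
    reclaimable = {g.name: max(g.current_mib - g.min_mib, 0) for g in guests}
    total_reclaimable = sum(reclaimable.values())
    if total_reclaimable == 0:
        return {g.name: g.current_mib for g in guests}

    targets = {}
    for g in guests:
        share = excess * reclaimable[g.name] / total_reclaimable
        targets[g.name] = int(g.current_mib - min(share, reclaimable[g.name]))
    return targets


if __name__ == "__main__":
    guests = [Guest("a", 400, 100, 300), Guest("b", 400, 200, 300)]
    print(balloon_targets(1000, 700, guests))   # below the threshold
    print(balloon_targets(1000, 900, guests))   # 100 MiB over the threshold
    print(balloon_targets(1000, 2000, guests))  # far over: pushed to minimums
```

The last case shows the flip side of a low balloon minimum: under enough host pressure, the guest can be squeezed all the way down to it.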

Which other settings? The only thing I can find online about the vfs cache together with zone_reclaim_mode is for Ceph storage.

These are the settings I have found recommended on other forums:

- vm.swappiness=0
- vm.vfs_cache_pressure=50
- vm.dirty_background_ratio = 5
- vm.dirty_ratio = 10
- vm.min_free_kbytes=2097152
- vm.zone_reclaim_mode=1
- vm.nr_hugepages = 400
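Two of those lines set memory aside rather than tune reclaim: vm.min_free_kbytes tells the kernel to keep that many kilobytes free, and vm.nr_hugepages pre-allocates a pool of huge pages that ordinary allocations cannot use unless something (for example a VM configured to use huge pages) maps them. A rough sketch of the arithmetic, assuming the 2 MiB default huge page size on x86_64 (check Hugepagesize in /proc/meminfo); parse_sysctl and reserved_mib are hypothetical helpers.

```python
SETTINGS = """\
vm.swappiness=0
vm.vfs_cache_pressure=50
vm.dirty_background_ratio = 5
vm.dirty_ratio = 10
vm.min_free_kbytes=2097152
vm.zone_reclaim_mode=1
vm.nr_hugepages = 400
"""

HUGEPAGE_KIB = 2048  # assumption: 2 MiB huge pages (x86_64 default)


def parse_sysctl(text):
    """Parse sysctl.conf-style 'key = value' lines into a dict of ints."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        key, _, value = line.partition("=")
        values[key.strip()] = int(value.strip())
    return values


def reserved_mib(values):
    """Memory these settings keep away from guests, in MiB."""
    return {
        "min_free": values.get("vm.min_free_kbytes", 0) / 1024,
        "hugepages": values.get("vm.nr_hugepages", 0) * HUGEPAGE_KIB / 1024,
    }


if __name__ == "__main__":
    print(reserved_mib(parse_sysctl(SETTINGS)))
```

With these values that is about 2 GiB kept free plus 800 MiB sitting in the huge page pool, which is worth knowing before copying the list onto a node whose whole point is squeezing more guests into the same RAM.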

I am using ZFS (ARC limited to 20GB of RAM).

I have had zone_reclaim_mode enabled for a few hours now, and it doesn't seem to have made much difference so far.

sysctl -a | grep 'reclaim' gives vm.zone_reclaim_mode = 1

I use something like vfs_cache_pressure 200 and swappiness 10 on a system with only 16GB RAM and 24GB of swap on HDDs.

I know the situation of RAM not being freed, or staying reserved even after a simple reboot of a windows guest, for instance. only when you stop the guest completely do the pages seem to get freed properly.

the system will, however, send them to swap sooner or later if they belong to an old state of that guest that's not gonna be used anymore. using swap like a trash can makes a lot of sense for freeing up memory. the same goes for reclaiming page cache: your guest usually caches its filesystem already, so you probably don't want the host to double up on that.

people tend to want swap empty or even turned off because they think swapping hurts performance. usually it doesn't; instead it does exactly what you want: it frees up real memory for more important tasks. hence the recommendation to grow swap and allow swapping.

however, your system setup might be different, and if you have more memory and faster disks some other combination may be better. I also usually find zfs quite ... problematic. memory taken by the ARC can't be freed through the usual system settings the way regular filesystem caching/buffering can.

which kind of forces double buffering on you and burns even more of your memory.

as @jackb pointed out, the question might still be why the guest does not release the memory. as we don't know the specs of your system, could it simply be that there is no need yet for the hypervisor to ask for the memory back?

a 40G guest + 20G ARC (if fully used) sum up to 60G, which still leaves some room if you have at least 64G or so?
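The same back-of-the-envelope check as a function: add up every guest's full allocation plus the ARC limit and compare the sum with physical RAM. A sketch only; it ignores the host OS's own usage as well as KSM and ballooning, and the function name is hypothetical.

```python
def worst_case_headroom_gib(host_gib, guest_alloc_gib, arc_max_gib):
    """Physical RAM left if every guest and the ARC are fully used at once."""
    committed = sum(guest_alloc_gib) + arc_max_gib
    return host_gib - committed


if __name__ == "__main__":
    print(worst_case_headroom_gib(64, [40], 20))  # the 64G example above
```

A negative result means the node is overcommitted and relies on KSM, ballooning or swap to cope if everything peaks at the same time.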

you didn't mention any problems you encountered with that, so maybe so far it's only a visual thing... maybe try putting a second 40G guest next to it, push its memory usage up, and see what happens 🤷‍♂️

@Harmony How do you ensure guests don't all use up all of their memory at the same time? It's bound to happen at some point.

Or someone just unloads the balloon driver.

Francisco

@lentro reasonable overselling is no problem. there are multiple things to factor in. you can use ballooning to regain some memory, as well as KSM, which often works quite well if you have a lot of similar guests.

apart from that and some ZFS ARC shenanigans, you simply want enough swap available so that, in cases where most of the guests really do try to get all their memory, the node can at least swap out old shit.

yes, in that case performance will drop because of the IO, and obviously this is not something you want as a permanent state. however, within reasonable limits and depending on your use case, 20-50% overcommitment on RAM will most likely never get you into that situation when the parameters are set properly.

if done wrong, it would simply be a case of OOM and the host node would start killing processes, so guests might randomly get stopped/killed.

Yeah... If the OS sees lots of free RAM, it's going to use that for cache and buffers, particularly if there are a lot of reads from the hard drive. I don't think Linux distros have the balloon driver installed by default, and a lot of people install from ISO.

According to https://pve.proxmox.com/wiki/Dynamic_Memory_Management, modern Linux kernels do.

Then I guess that user would just have full dedicated RAM. But that is not a good enough reason not to do it.

swap is set to 0 for our VMs, and I left it that way mainly because the cloud templates are set up like that.

I found this neat script for optimizing Proxmox, https://github.com/extremeshok/xshok-proxmox, but it doesn't cover what you suggested. swappiness is currently set to 10 (40GB total), and the node has 256GB RAM + 2x2TB NVMe.

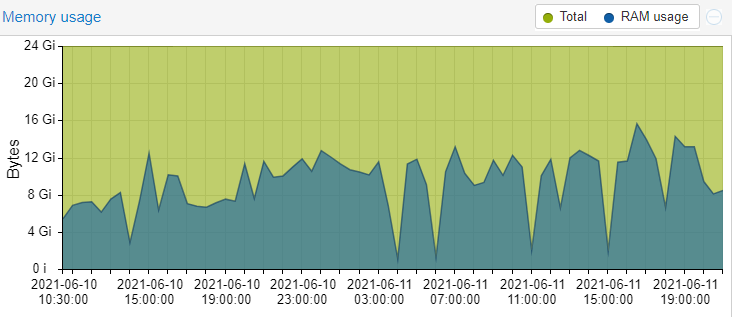

My concern with the current setup (assuming it actually is taking RAM back) is that I am not sure how to determine how close I am to overloading the node.

this is an important piece of information. how much of that 256GB is actually in use?
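One way to answer that from the host is to read /proc/meminfo and look at MemAvailable (the kernel's estimate of what it can hand out without swapping) together with swap usage. A minimal sketch; the helper names and sample figures are made up. On a ZFS host, ARC memory may be reported as used rather than available, so it can be worth accounting for the ARC separately.

```python
from pathlib import Path


def parse_meminfo(text):
    """Turn /proc/meminfo text into {field: first numeric value}."""
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            info[key.strip()] = int(fields[0])
    return info


def read_host_meminfo(path="/proc/meminfo"):
    """Read the live values (Linux only)."""
    return parse_meminfo(Path(path).read_text())


def memory_pressure(info):
    """Percent of RAM not estimated as available, and percent of swap in use."""
    total, available = info["MemTotal"], info["MemAvailable"]
    swap_total = info.get("SwapTotal", 0)
    swap_used = swap_total - info.get("SwapFree", 0)
    return {
        "ram_used_pct": 100 * (total - available) / total,
        "swap_used_pct": 100 * swap_used / swap_total if swap_total else 0.0,
    }


if __name__ == "__main__":
    sample = (
        "MemTotal:        1000000 kB\n"
        "MemAvailable:     150000 kB\n"
        "SwapTotal:        200000 kB\n"
        "SwapFree:         150000 kB\n"
    )
    print(memory_pressure(parse_meminfo(sample)))
```

Watching these two numbers over time, plus whether swap-in activity (for example the si column of vmstat) stays near zero, gives a better sense of how close the node is to trouble than the per-VM reserved figure.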

KSM will usually only kick in above 60% usage or so, and ballooning also only starts reclaiming when really needed. have you pushed the overall memory to its limit already?

at some point you should then see swap being used. however, this still doesn't necessarily mean that the reserved memory of the process drops, simply because pages that are in swap, afaik, also count towards it.

again, as long as you don't run into real issues, I don't think you have any problem at all. maybe describe in more detail what you intend to do there (like how many guests with how much RAM, which OS etc.). depending on that, I can't see why some reasonable overcommitment on RAM should be problematic. I probably wouldn't double the RAM just like that, but if it's all linux guests (maybe even managed by yourself), I'd consider 50-100 GB doable. just make sure you have at least that much, plus some buffer, available as swap, just in case ...

PS: thanks for the link as well; I didn't know that one and will definitely look into those suggestions.

Currently RAM usage is fluctuating between 80% and 85%. Doesn't KSM kick in at 80% by default, not 60%, according to https://pve.proxmox.com/wiki/Dynamic_Memory_Management? The script told me to set

KSM_THRES_COEF=10 and KSM_SLEEP_MSEC=10 in /etc/ksmtuned.conf, which I have done, so it should only kick in at 90%, but I can see KSM sharing is already at 30GB.

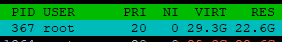
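ksmtuned (the daemon that reads /etc/ksmtuned.conf) is what decides whether KSM runs. As far as I can tell from its script, it computes a threshold of KSM_THRES_COEF percent of total memory and stops KSM only when free memory is above that threshold and the summed resident memory of the QEMU processes plus the threshold is still below total memory. The model below follows that reading, ignores the KSM_THRES_CONST floor, and uses hypothetical function names; treat it as an assumption and compare it with your own copy of the script. The default coefficient of 20 matches the 80% figure on the Proxmox wiki.

```python
def ksm_threshold(total, thres_coef=20):
    """KSM_THRES_COEF percent of total memory (any unit, e.g. MiB)."""
    return total * thres_coef // 100


def ksm_should_run(total, free, committed, thres_coef=20):
    """Simplified ksmtuned decision; committed = summed QEMU resident memory."""
    thres = ksm_threshold(total, thres_coef)
    # KSM is only stopped when BOTH checks leave comfortable room.
    can_stop = committed + thres < total and free > thres
    return not can_stop


if __name__ == "__main__":
    total = 256 * 1024  # a 256 GiB node, in MiB
    print(ksm_threshold(total, thres_coef=10))
    print(ksm_should_run(total, free=40_000, committed=200_000, thres_coef=10))
    print(ksm_should_run(total, free=40_000, committed=240_000, thres_coef=10))
```

So with KSM_THRES_COEF=10, the free-memory check alone would only start KSM below roughly 26GB free, but the committed-memory check can keep it running earlier. Also, per the kernel's KSM documentation, writing 0 to /sys/kernel/mm/ksm/run stops ksmd but keeps already merged pages, so a sharing figure does not have to drop just because KSM was paused.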

Take this virtual machine, for example: it's reserving 22GB, but it has been online for less than 24 hours and not once in that time has it actually peaked at that (24-hour max usage).


Ideally I would like it to reserve only what it's using in real time, but I know that's not possible with KVM. I don't see why it's reserving more than 10GB; its current usage is only


Does anyone have experience with how well VMWare ballooning works?

yeah, and that's not gonna happen, and imho it doesn't make much sense. as said before, that's more of a cosmetic thing you might find disturbing, but keep in mind that KSM, swap, ballooning etc. are still part of what is shown as reserved.

so if KSM is already getting you 30GB, that's good. you won't see ballooning displayed in the panel, but the balloon driver will claim memory inside the VM, and while that memory is still part of the kvm process's reserved memory, it is made available for other services.

yeah, maybe I remembered that wrong and/or lowered it to 60 on my system so it kicks in earlier, but as always that's a trade-off against some CPU power, as KSM needs some while running.

simple: because it can, and therefore it always will, e.g. to use it as cache/buffers in your guest. if you think the VM doesn't need that much memory, why give it 24G?

as others pointed out, in the end it comes down to your use case. do you want to sell those 24G to others? then expect them to use it and make sure they can use it at any point.

obviously things might work better (in terms of averaging) with more guests that are a bit smaller. I'd still say something like 30% would be a reasonable amount of overcommitment to aim for. leaving the ARC cache out of the equation, that would be ~70GB, and you already get 30GB of that through KSM right now. the other 40GB I'd factor in via ballooning, or even a bit more.

obviously with that you still want at least 80GB of swap available to give enough room for peaks and to avoid OOM in case KSM/ballooning don't manage to cover it.
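The numbers in this post as a small calculation: take physical RAM minus the ARC limit, add the overcommit percentage, and see how much of the extra is already covered by KSM and how much is left for ballooning. The function name is hypothetical, and the 30% figure is just the rule of thumb from this post, not a recommendation from any documentation.

```python
def overcommit_plan(host_gib, arc_gib, overcommit=0.30, ksm_gib=0.0):
    """Split a target overcommit into the parts covered by KSM and ballooning."""
    usable = host_gib - arc_gib   # RAM left for guests once the ARC is full
    extra = usable * overcommit   # how much more than that you plan to sell
    from_ksm = min(ksm_gib, extra)
    return {
        "usable_gib": usable,
        "sellable_gib": usable + extra,
        "extra_gib": extra,
        "from_ksm_gib": from_ksm,
        "from_ballooning_gib": extra - from_ksm,
    }


if __name__ == "__main__":
    plan = overcommit_plan(host_gib=256, arc_gib=20, overcommit=0.30, ksm_gib=30)
    for key, value in plan.items():
        print(f"{key}: {value:.1f}")
```

For the 256GB node with a 20GB ARC this gives about 70.8GB of extra, 30GB of it from KSM and roughly 40.8GB expected from ballooning. Swap is the safety net for the case where neither covers a peak, which is why the suggestion is to keep at least that much (80GB here) available.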

the system will handle that, no matter what the 'reserved' memory shows per process. don't get mad over a single number there.

@Falzo An update: Proxmox was left running at about 95% RAM usage overnight. When I woke up it was still around that number, and from the logs I made the night before it doesn't look like any VM returned much memory. The only time I can see RAM being returned is when I set the ballooning minimum lower than the memory amount, for example like this

but the issue I had with that before was that even when the node's RAM usage was below 80%, running free -g inside the VM wouldn't show the full 24GB of RAM. When the node's usage was 70% it showed only 10GB and never went up, causing crashes, so a ballooning minimum is not an option.

Overselling RAM is pointless, it's incredibly cheap... Honestly, RAM is one of the LEAST expensive components of a server build-out. I spec all my newer nodes with 128GB RAM. Going to be upgrading my first 2 nodes from 96 to 128 here soon. Just spend the extra and don't worry about it.

@SWN_Michael

um....

Maybe for non-ECC RAM and DDR3 stuff.

RAM is more expensive per GB than HDD/SSD storage.

I do believe in MAXing out my RAM on some systems. But I also have systems that can handle more than 2TB of RAM. So in this case it really DEPENDS on the system itself. But RAM is by far not the cheapest item, or even close to it, and it wasn't even before the whole shortages.

Now to address @Harmony's issue: from what I've seen running VMs, some OSs will pull the maximum amount of RAM assigned to them. So the VM will show as fully utilized in a system like proxmox, but looking inside the VM itself will show only X% utilized.

I said ONE, not the cheapest. I still think you'd agree that it's smart to put in at least 96-128GB for a VPS node tho